Unmasking Deepfake Technology: What You Need to Know

Introduction:

Deepfake technology has been making waves in the digital landscape, offering awe-inspiring possibilities while raising serious concerns. In this post, we’ll look at what deepfake technology is, explore its use cases, highlight its potential dangers, and explain how to tell whether a video may have been manipulated with it.

What Is Deepfake Technology?

Deepfake technology uses artificial intelligence (AI) and machine learning to create realistic, and often deceptive, manipulated media, typically videos or audio recordings. The term “deepfake” combines “deep learning” and “fake.” By training algorithms on large datasets, these tools can swap faces, mimic voices, and generate content that can be indistinguishable from authentic media.

Key characteristics of deepfake technology include:

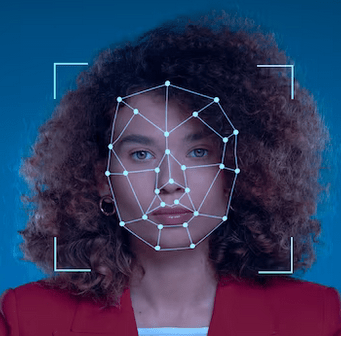

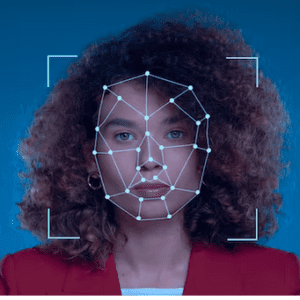

- Face Swapping: Deepfakes can replace the original face in a video with a different face, making it appear as though the person in the video is someone else. This is achieved by training algorithms on large datasets of images and videos to learn facial features and expressions.

- Voice Synthesis: Some deepfake applications extend beyond visual manipulation to include the synthesis of realistic-sounding voices. By analyzing and mimicking speech patterns, these technologies can make it seem like a person is saying things they never did.

- Realistic Content: Deepfakes aim to be difficult to distinguish from genuine media. Advanced algorithms and neural networks make it possible to produce highly convincing results.

- Potential Misuse: While deepfake technology has creative and entertainment applications, it also raises concerns about its potential for misuse. It can be exploited to create fake news, false evidence, or malicious content that can harm reputations and spread misinformation.

- Detection Challenges: Detecting deepfakes is difficult because the technology keeps evolving and the results can be highly convincing. Researchers and technology developers are working on tools to detect manipulated content.
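The face-swapping bullet above mentions training algorithms on large datasets. One widely used approach, popularized by early open-source face-swap tools, trains a single shared encoder together with a separate decoder for each person; a swap encodes a frame of person A and decodes it with person B's decoder. The sketch below shows only that structure at toy scale. The 4-number "faces", the linear layers, and the names `ToyFaceSwap`, `make_faces`, and `train` are hypothetical stand-ins; real tools use deep convolutional networks trained on many aligned face images.

```python
import random


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


class ToyFaceSwap:
    """Hypothetical toy: one shared linear encoder, one linear decoder per identity."""

    def __init__(self, dim, latent, seed=0):
        rng = random.Random(seed)
        self.encoder = random_matrix(latent, dim, rng)  # shared by both people
        self.decoders = {"A": random_matrix(dim, latent, rng),
                         "B": random_matrix(dim, latent, rng)}

    def reconstruct(self, face, who):
        return matvec(self.decoders[who], matvec(self.encoder, face))

    def loss(self, faces, who):
        """Mean squared reconstruction error over a list of faces."""
        total = 0.0
        for x in faces:
            y = self.reconstruct(x, who)
            total += sum((yi - xi) ** 2 for yi, xi in zip(y, x))
        return total / len(faces)

    def sgd_step(self, x, who, lr):
        """One gradient step on 0.5 * ||decoder(encoder(x)) - x||^2."""
        dec = self.decoders[who]
        z = matvec(self.encoder, x)
        err = [yi - xi for yi, xi in zip(matvec(dec, z), x)]
        # Gradient w.r.t. the latent code, computed before the decoder changes.
        grad_z = [sum(dec[i][j] * err[i] for i in range(len(err)))
                  for j in range(len(z))]
        for i, row in enumerate(dec):  # update this person's decoder
            for j in range(len(z)):
                row[j] -= lr * err[i] * z[j]
        for j, row in enumerate(self.encoder):  # update the shared encoder
            for k in range(len(x)):
                row[k] -= lr * grad_z[j] * x[k]

    def swap(self, face_of_a):
        """Encode a face of A with the shared encoder, render it with B's decoder."""
        return self.reconstruct(face_of_a, "B")


def train(model, faces_a, faces_b, epochs=300, lr=0.02):
    """Alternate reconstruction steps for A and B so both shape the shared encoder."""
    for _ in range(epochs):
        for xa, xb in zip(faces_a, faces_b):
            model.sgd_step(xa, "A", lr)
            model.sgd_step(xb, "B", lr)


def make_faces(basis1, basis2, n, rng):
    """Hypothetical toy 'faces': two random factors mixed on identity-specific bases."""
    faces = []
    for _ in range(n):
        a, b = rng.random(), rng.random()
        faces.append([a * p + b * q for p, q in zip(basis1, basis2)])
    return faces


if __name__ == "__main__":
    rng = random.Random(1)
    faces_a = make_faces([1, 0, 1, 0], [0, 1, 0, 1], 20, rng)
    faces_b = make_faces([1, 1, 0, 0], [0, 0, 1, 1], 20, rng)
    model = ToyFaceSwap(dim=4, latent=2)
    print("loss A before:", model.loss(faces_a, "A"))
    train(model, faces_a, faces_b)
    print("loss A after:", model.loss(faces_a, "A"))
    print("A rendered by B's decoder:", model.swap(faces_a[0]))
```

The idea behind the shared encoder is that it must serve both decoders, so it is pushed toward features common to both people (such as pose and expression) while each decoder learns to render one identity. In this toy, nothing forces the two people's latent codes to line up, so `swap` illustrates the data flow rather than producing a convincing swap.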

Use Cases of Deepfake Technology:

1. Entertainment:

Deepfake technology is often used for entertainment purposes, allowing filmmakers and content creators to seamlessly incorporate actors into scenes or bring historical figures back to life.

2. Dubbing and Localization:

Deepfake techniques can support dubbing and localization by adjusting an actor’s lip movements to match audio recorded in another language, keeping audio and visuals synchronized across languages and regions.

3. Research and Simulation:

In research and simulation environments, deepfake technology can be applied to recreate realistic scenarios for training purposes, such as medical simulations or disaster response training.

Dangers of Deepfake Technology:

1. Spread of Misinformation:

One of the most significant concerns is that deepfake technology can be misused to create and spread misinformation, fake news, or defamatory content.

2. Identity Theft:

Deepfakes can be used to impersonate someone’s face or voice. Because manipulated content is hard to tell apart from genuine content, such impersonations can be exploited for identity theft and fraud.

3. Privacy Invasion:

Individuals may find themselves unwittingly featured in manipulated content, leading to privacy concerns and reputational damage.

How to Detect Deepfake Videos:

1. Facial and Vocal Inconsistencies:

Pay attention to subtle facial and vocal inconsistencies in the video, such as unnatural movements, mismatched lip-syncing, or unusual facial expressions.

2. Eye Contact and Blinking:

Deepfake algorithms may struggle with realistic eye movements and blinking, so closely observe these aspects to identify potential manipulation.

3. Artifacts and Glitches:

Look for visual artifacts, anomalies, or glitches in the video, as these can be indicators of manipulation.

4. Source Verification:

Verify the source of the video and cross-reference it with other reliable sources to ensure authenticity.
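Some of the checks above can be partly automated. The sketch below assumes that per-frame eye landmarks and grayscale pixel values have already been extracted by a separate face-landmark or video tool (not shown). It computes the eye aspect ratio (EAR) proposed by Soukupová and Čech to count blinks, flags frames whose change from the previous frame is a statistical outlier, and compares a file's SHA-256 digest with one published by the original source. The function names, the 0.2 EAR threshold, the two-frame minimum, and the z-score cutoff of 3 are illustrative assumptions, not validated settings.

```python
import hashlib
import math
import statistics


def eye_aspect_ratio(eye):
    """EAR from six (x, y) landmarks p1..p6: p1/p4 are the corners, p2/p3 top, p5/p6 bottom."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series, threshold=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # eye still closed on the last frame
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps, **kwargs):
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes


def glitch_frames(frames, z_threshold=3.0):
    """Indices of frames whose mean absolute change from the previous frame is an outlier."""
    diffs = [sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur)
             for prev, cur in zip(frames, frames[1:])]
    if len(diffs) < 2:
        return []
    mean = statistics.fmean(diffs)
    spread = statistics.pstdev(diffs)
    if spread == 0:  # perfectly uniform motion: nothing stands out
        return []
    return [i + 1 for i, d in enumerate(diffs) if (d - mean) / spread > z_threshold]


def matches_trusted_copy(path, trusted_sha256):
    """True if the file is byte-identical to the copy whose SHA-256 the source published."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            h.update(chunk)
    return h.hexdigest() == trusted_sha256.lower()
```

Each result is only a hint. Scene cuts, lighting changes, and compression also cause frame-difference spikes, and generators can learn to blink naturally. Likewise, a hash mismatch shows only that the file differs from the trusted copy: re-encoding by a sharing platform changes the digest even when the content is untouched.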

Conclusion:

As deepfake technology continues to evolve, it’s crucial to stay informed about its capabilities, applications, and potential risks. By being vigilant and employing these detection methods, we can better navigate the digital landscape and distinguish between genuine and manipulated content.

Frequently Asked Questions:

Q1: What is deepfake technology? A1: Deepfake technology uses artificial intelligence and machine learning to create realistic but fake videos or audio recordings by swapping faces, mimicking voices, or generating content that closely resembles authentic media.

Q2: How are deepfakes created? A2: Deepfakes are created by training algorithms on large datasets of images, videos, or audio recordings, enabling the AI to learn and replicate facial features, expressions, and speech patterns.

Q3: What are the common use cases of deepfake technology? A3: Common use cases include entertainment, dubbing, localization, research simulations, and training scenarios. However, there are concerns about potential misuse for misinformation and identity theft.

Q4: How can deepfake videos be detected? A4: Detection involves looking for facial and vocal inconsistencies, unnatural eye movements, blinking patterns, visual artifacts, and glitches. Source verification and cross-referencing with reliable sources also aid in detection.

Q5: What are the dangers of deepfake technology? A5: Dangers include the spread of misinformation, identity theft, privacy invasion, and reputational damage. Deepfakes can be used maliciously to deceive and manipulate public opinion.

Q6: Can deepfake technology be used for positive purposes? A6: Yes, deepfake technology has positive applications in entertainment, filmmaking, dubbing, and research simulations. However, ethical considerations and responsible use are essential.

Q7: Are there legal implications for creating and sharing deepfakes? A7: Yes, depending on the jurisdiction, creating and sharing deepfakes without consent can have legal consequences. It may violate privacy laws, intellectual property rights, and laws against defamation.

Q8: How can individuals protect themselves from deepfake threats? A8: Individuals can protect themselves by being vigilant about the content they consume, verifying sources, and staying informed about the existence of deepfake technology.

Q9: Can deepfake detection technology keep up with evolving deepfake techniques? A9: Detection technology is continually evolving, but it faces challenges as deepfake techniques advance. Researchers are actively developing more sophisticated tools to counter emerging threats.

Q10: What role does digital literacy play in combating deepfake threats? A10: Digital literacy is crucial for recognizing potential deepfake content, understanding the risks, and adopting critical thinking skills to navigate the digital landscape responsibly. Education and awareness are key elements in combating deepfake threats.